Creating Chatbots in African Languages

The field of natural language processing (NLP) has advanced furthest in the most widely used languages, such as English and Russian. But an emerging body of research focuses on training AI models on African languages.

Thanks to such efforts, the dream of an African language chatbot is edging closer to reality.

Chatbot Research Dominated by English Language

Natural language processing and the large language models that power chatbots like ChatGPT are still relatively new technologies, and to date, research and development have focused on the most widely spoken languages.

For example, ChatGPT is available in English, Spanish, French, German, Portuguese, Italian, Dutch, Russian, Arabic, and Chinese.

The tendency toward language dominance in AI research is largely driven by data availability.

It is estimated that more than half of all written content online is in English. As a result, the largest and most readily available datasets for training language models are in English, followed by those in the other most widely used languages.

African Languages Pose a Challenge for AI Researchers

Currently, the world’s largest AI firms are competing to build the most advanced chatbots for a handful of languages. But another sphere of research aims to develop AI tools for less widely spoken languages.

For African languages, the limited availability of training data presents a significant challenge for AI developers.

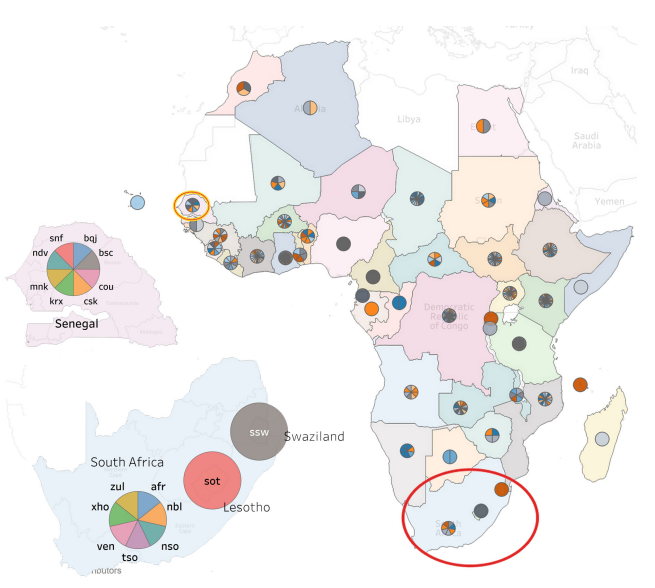

The linguistic diversity of many African countries further complicates things. For example, South Africa has 11 official spoken languages, and 35 languages are indigenous to the country. With around 2,000 languages in use across the continent, amassing digital content libraries on a scale equivalent to English would be nearly impossible.

Moreover, one recent study identified the lack of basic digital language tools as a factor that inhibits content creation. As the authors observed:

“Creating digital content in African languages is frustrating due to a lack of basic tooling such as dictionaries, spell checkers, and keyboards.”

Nevertheless, efforts are underway to increase the availability of African language data, for instance, by digitizing archival language repositories and making more datasets freely accessible. The work of content creators, curators, and translators is also critical.

Multilingual Models Could Make African Language Chatbots a Reality

Although the lack of training data has held back African-language NLP research, multilingual pre-trained language models (mPLMs) could help researchers overcome this challenge.

Pre-trained models can be thought of as the building blocks of high-functioning chatbots. However, they still require task-specific fine-tuning in order to deliver conversational outputs.

By acquiring generalizable linguistic information during pretraining, multilingual models are able to interpret the basic structure and outline of related languages without the massive training datasets normally required.
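One mechanism behind this is a shared subword vocabulary: pieces learned from pretraining text can also segment words in a related language, so fewer inputs fall outside what the model has seen. The sketch below illustrates the idea with a greedy longest-match tokenizer, a simplified take on the WordPiece approach. The vocabulary, the words, and the `tokenize`/`coverage` helpers are hypothetical toy examples, not data or code from any real model.

```python
# Hypothetical shared subword vocabulary, standing in for pieces a multilingual
# model might learn during pretraining. Toy strings only, not real model data.
SHARED_VOCAB = {"ka", "ta", "ba", "ntu", "mu", "wa",
                "a", "b", "k", "m", "n", "t", "u", "w"}


def tokenize(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match segmentation (simplified WordPiece-style).

    Unlike real WordPiece, only the uncovered character becomes "[UNK]",
    and no continuation markers are used.
    """
    max_len = max(len(piece) for piece in vocab)
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate piece first, then shrink it.
        for length in range(min(max_len, len(word) - i), 0, -1):
            piece = word[i:i + length]
            if piece in vocab:
                tokens.append(piece)
                i += length
                break
        else:
            # No vocabulary piece starts here: emit an unknown token.
            tokens.append("[UNK]")
            i += 1
    return tokens


def coverage(words: list[str], vocab: set[str]) -> float:
    """Fraction of produced tokens that are known vocabulary pieces."""
    tokens = [t for w in words for t in tokenize(w, vocab)]
    known = sum(t != "[UNK]" for t in tokens)
    return known / len(tokens)


if __name__ == "__main__":
    print(tokenize("muntu", SHARED_VOCAB))   # ['mu', 'ntu']
    print(coverage(["muntu", "xyz"], SHARED_VOCAB))  # 0.4
```

A word built from familiar pieces ("muntu") is fully covered, while one from an unrelated script or spelling system ("xyz") falls back to unknown tokens; real tokenizers and coverage statistics are far more sophisticated.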

Unsurprisingly, one recent study showed that language similarity improves model performance. Just as speakers of related languages can often understand each other, a model trained on one language can often interpret similar languages with reasonable accuracy.
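The article does not say how the study measured language similarity. As an assumption for illustration only, a common and simple proxy is the overlap of character n-grams between two texts. The sketch below computes the Jaccard similarity of character trigram sets; the function names and sample strings are hypothetical.

```python
def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Return the set of character n-grams, padding each word with spaces."""
    grams = set()
    for word in text.lower().split():
        padded = f" {word} "
        for i in range(len(padded) - n + 1):
            grams.add(padded[i:i + n])
    return grams


def jaccard_similarity(text_a: str, text_b: str, n: int = 3) -> float:
    """Jaccard overlap of character n-gram sets: |A & B| / |A | B|."""
    a, b = char_ngrams(text_a, n), char_ngrams(text_b, n)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    # Toy strings, not real language samples.
    print(jaccard_similarity("muntu wantu", "muntu bantu"))  # 0.6
    print(jaccard_similarity("muntu wantu", "xyz qrs"))      # 0.0
```

Texts that share many spelling patterns score closer to 1.0; in practice, researchers may instead use genealogical, lexical, or typological measures.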

Using this approach, researchers developed an mPLM they called SERENGETI, which covers 517 African languages and language varieties.

This represents a major technological leap forward and a significant improvement on the 31 African languages covered by earlier models.

Disclaimer

In adherence to the Trust Project guidelines, BeInCrypto is committed to unbiased, transparent reporting. This news article aims to provide accurate, timely information. However, readers are advised to verify facts independently and consult with a professional before making any decisions based on this content.
